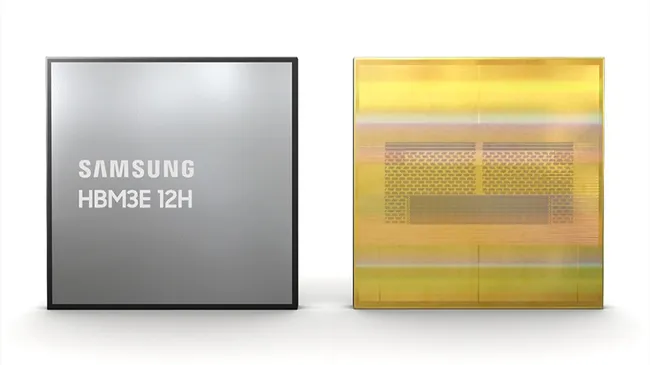

Samsung’s HBM3E 12H Breakthrough: A Game-Changer in High-Capacity Memory Race

Samsung Electronics has announced that it has developed the industry’s first 12-stack HBM3E DRAM, the HBM3E 12H. The announcement puts Samsung ahead of Micron Technology on capacity and could influence the memory choices for Nvidia’s next-generation AI cards.

Setting New Standards in Memory Technology

Samsung’s HBM3E 12H offers bandwidth of up to 1,280GB/s and a capacity of 36GB, which Samsung describes as a 50% improvement over its previous 8-stack HBM3 8H. The company attributes the gain to the advanced thermal compression non-conductive film (TC NCF) used in the 12-stack design. The film lets the 12-layer product keep the same height specification as 8-layer ones, so it still meets current HBM package requirements, and it results in a 20% increase in vertical density compared to Samsung’s HBM3 8H product.
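As a rough back-of-the-envelope check, the quoted figures can be broken down per layer and per interface pin. The sketch below uses the 36GB, 12-layer, and 1,280GB/s numbers from the announcement; the 1024-bit interface width is an assumption (it is the usual width of an HBM stack but is not stated in the article), and the function names are purely illustrative.

```python
# Figures quoted in the article for Samsung's HBM3E 12H.
CAPACITY_GB = 36         # total capacity of one stack, in gigabytes
LAYERS = 12              # DRAM layers in one stack
BANDWIDTH_GB_S = 1280    # peak bandwidth of one stack, in gigabytes per second

# Assumption, not stated in the article: a 1024-bit-wide stack interface.
INTERFACE_WIDTH_BITS = 1024


def capacity_per_layer_gb(capacity_gb: float, layers: int) -> float:
    """Capacity contributed by each DRAM layer, in gigabytes."""
    return capacity_gb / layers


def per_pin_rate_gbps(bandwidth_gb_s: float, width_bits: int) -> float:
    """Data rate each interface pin must sustain, in gigabits per second."""
    return bandwidth_gb_s * 8 / width_bits


per_layer_gb = capacity_per_layer_gb(CAPACITY_GB, LAYERS)
per_layer_gbit = per_layer_gb * 8
pin_rate = per_pin_rate_gbps(BANDWIDTH_GB_S, INTERFACE_WIDTH_BITS)

print(f"Capacity per layer: {per_layer_gb} GB ({per_layer_gbit} Gb)")
print(f"Implied per-pin data rate: {pin_rate} Gbps")
```

Under these assumptions, each layer contributes 3GB and each pin would need to run at about 10Gbps to reach the quoted peak bandwidth.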

The Industry’s Demand for Higher Capacity

Yongcheol Bae, Executive Vice President of Memory Product Planning at Samsung Electronics, said, “The industry’s AI service providers are increasingly requiring HBM with higher capacity, and our new HBM3E 12H product has been designed to answer that need.” According to Samsung, the product reflects its drive to develop core technologies for high-stack HBM and to lead the high-capacity HBM market.

Battle in the AI Arena

Micron Technology has begun mass production of its 24GB 8H HBM3E, which is destined for Nvidia’s latest H200 Tensor Core GPUs, but Samsung’s 36GB HBM3E 12H outperforms Micron’s offering in both capacity and bandwidth. Although Samsung missed out on the H200, Nvidia’s most expensive artificial intelligence card, its memory is well placed to be chosen for upcoming systems, including the highly anticipated Nvidia B100 Blackwell AI accelerator expected later this year.
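The capacity gap between the two parts is simple arithmetic, sketched below with the 36GB and 24GB figures from the article. The stack count per accelerator is a hypothetical value chosen only for illustration; the article does not say how many stacks any Nvidia product uses.

```python
# Per-stack capacities quoted in the article.
SAMSUNG_12H_GB = 36   # Samsung HBM3E 12H
MICRON_8H_GB = 24     # Micron HBM3E 8H

# Hypothetical value for illustration only; not taken from the article.
HYPOTHETICAL_STACKS = 6


def percent_increase(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new - old) / old * 100


def total_capacity_gb(per_stack_gb: int, stacks: int) -> int:
    """Total memory when `stacks` identical HBM stacks sit on one package."""
    return per_stack_gb * stacks


gain = percent_increase(SAMSUNG_12H_GB, MICRON_8H_GB)
total_12h = total_capacity_gb(SAMSUNG_12H_GB, HYPOTHETICAL_STACKS)
total_8h = total_capacity_gb(MICRON_8H_GB, HYPOTHETICAL_STACKS)

print(f"Per-stack capacity gain: {gain}%")
print(f"{HYPOTHETICAL_STACKS} stacks of 12H: {total_12h} GB")
print(f"{HYPOTHETICAL_STACKS} stacks of 8H: {total_8h} GB")
```

With the same number of stacks, the 12H part gives 50% more total memory, which is the practical appeal for capacity-bound AI workloads.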

Sampling and Production Timelines

Samsung has already begun sampling its 36GB HBM3E 12H to customers, with mass production scheduled to begin in the first half of this year. Meanwhile, Micron is preparing to ship its 24GB 8H HBM3E in the second quarter of 2024. Competition between the two companies in the HBM market is expected to drive further innovation as demand for high-capacity memory grows with artificial intelligence workloads.